Artificial intelligence (AI) has led to the development of sophisticated conversational systems known as chatbots. These AI-powered programs can provide information, answer questions, and even complete tasks. Chatbots are increasingly common in customer service, healthcare, and education. In education, however, chatbots have been found to generate false or misleading information, known as “hallucinations,” and automated bots have been used to create fake students.

Chatbot hallucinations in higher education stem largely from the complexity of educational queries and the diverse range of topics involved. Community college chatbots need to understand a wide range of academic subjects, courses, and student queries, unlike more narrowly scoped applications in which a chatbot may only handle customer service inquiries.

Ambiguity in educational queries

Students often ask complex and context-specific questions about course requirements, program details, and academic pathways. The inherent ambiguity in these queries can challenge chatbots, leading to misinterpretations and, subsequently, hallucinated responses. For instance, a student inquiring about the prerequisites for a specific course may provide incomplete information, triggering a chatbot hallucination if the system fails to infer the intended meaning accurately.
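The prerequisite example can be made concrete with a small Python sketch. It assumes a hypothetical course catalog (`CATALOG`, with made-up course codes) and a hypothetical helper, `prerequisite_reply`, that answers only from that catalog and asks a clarifying question when a query such as “calculus” matches more than one course, rather than guessing. It illustrates the failure point described above; it is not how any particular college chatbot works.

```python
import re

# Hypothetical catalog; a real chatbot would query the college's course database.
CATALOG = {
    "MATH 150": {"title": "Calculus I", "prereqs": ["MATH 140"]},
    "MATH 151": {"title": "Calculus II", "prereqs": ["MATH 150"]},
    "ENGL 101": {"title": "College Composition", "prereqs": []},
}


def _keywords(text: str) -> set[str]:
    """Lowercase words longer than three letters (drops 'i', 'ii', 'for', ...)."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if len(w) > 3}


def prerequisite_reply(query: str) -> str:
    """Answer only from CATALOG; ask a follow-up question instead of guessing."""
    q = query.lower()
    # 1. Course codes typed in the query take priority.
    matches = [code for code in CATALOG if code.lower() in q]
    # 2. Otherwise, fall back to loose keyword matching on course titles.
    if not matches:
        words = _keywords(q)
        matches = [code for code, info in CATALOG.items() if words & _keywords(info["title"])]
    if not matches:
        return "I couldn't find that course. Which course code are you asking about?"
    if len(matches) > 1:
        # Ambiguous query: list the candidates instead of picking one.
        options = ", ".join(f"{c} ({CATALOG[c]['title']})" for c in matches)
        return f"Did you mean one of these: {options}?"
    code = matches[0]
    prereqs = CATALOG[code]["prereqs"]
    if not prereqs:
        return f"{code} has no listed prerequisites."
    return f"{code} requires: {', '.join(prereqs)}."
```

For example, “What do I need before MATH 151?” gets a direct answer, while “prerequisites for calculus?” gets a question back listing both calculus courses.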

- Data bias and inconsistencies: Relying on educational databases and resources for chatbot training data introduces the risk of bias and outdated information. Inaccuracies in the training data, whether they reflect biased perspectives or outdated facts, can contribute to hallucinated responses. Because chatbots must cover a vast array of academic subjects, it is crucial to address bias and ensure the accuracy of the information in their knowledge base.

- Human-chatbot interaction dynamics: The dynamics of human-chatbot interaction further complicate the issue of hallucinations. College settings foster collaborative learning environments in which students engage in discussions and group activities. Chatbots operating in such settings must handle ambiguous queries arising from these collaborative interactions, which increases the risk of misinterpretation and subsequent hallucination. Additionally, when conversations are fed back into a chatbot's training data or knowledge base, incorrect information that students inadvertently provide can reinforce inaccurate patterns and perpetuate hallucinations in later interactions.

Implications of chatbot hallucinations in higher education

The implications of chatbot hallucinations extend beyond the general concerns seen in broader applications. In education, where precision and reliability are paramount, misleading information can significantly affect students’ academic journeys.

- Academic performance: Misleading information related to course prerequisites or curriculum details can have tangible effects on students’ academic performance. If a chatbot provides inaccurate details about the requirements for a specific course, students may enroll without the necessary preparation, potentially leading to suboptimal academic outcomes.

- Career guidance: Hallucinated responses regarding career advice or program recommendations can misguide students, influencing their educational and professional trajectories. Inaccurate guidance may lead students to pursue paths that are not aligned with their interests or long-term goals, hindering their overall development.

- Application processes: Chatbots often assist students with inquiries about application procedures, deadlines, and required documentation. Inaccurate information in these critical areas can result in students missing opportunities or facing unnecessary challenges during the enrollment process. The potential for confusion and frustration among students underscores the importance of mitigating hallucinations in these specific contexts.

Growing problems and motives behind bots posing as students

Higher education faces a troubling trend: the use of bots to register as students, particularly in online classes. This may sound far-fetched, but it is a reality that colleges and universities are facing today. A central motive is to defraud colleges and universities. By registering for classes without any intention of attending, these bots inflate enrollment numbers and cause financial losses for institutions, which must still pay faculty members to teach the classes even when the seats are filled with bots.

These automated programs are being used for various reasons, ranging from gaining access to popular classes to scamming institutions out of money. According to Tytunovich (2023), over 65,000 fake applications for financial aid were submitted to California’s community college system in 2021, and one community college identified and blocked approximately $1.7 million in attempted student aid fraud. The San Diego Community College District was less fortunate: it paid out over $100,000 in fraudulent claims before catching on. According to the Chancellor’s Office, about 20% of the traffic coming to the system’s online application portal is from bots and other “malicious” actors (West et al., 2021).

| Feature | Bots | Chatbots |
| --- | --- | --- |
| Primary function | Automate tasks | Communicate and provide information |
| User interaction | No direct user interaction | Direct user interaction through natural language |
| Typical use cases | Customer service, marketing, social media | Customer service, education, e-commerce |

Potential use and misuse by students

In the article “OpenAI’s Custom Chatbots Are Leaking Their Secrets,” Burgess (2023) discusses a recent development: OpenAI’s GPTs, which give users the ability to create their own custom chatbots. OpenAI subscribers can now build these custom bots, also known as AI agents, and tailor them for personal use or share them publicly on the web.

On the positive side, these tools could transform the online learning experience by offering students personalized learning support, enhancing engagement and interaction, providing real-time feedback, assisting with study preparation, and offering language support. Despite these potential benefits in classroom settings, their use also raises concerns, including over-reliance, plagiarism, bias, limited creativity, ethical issues, accessibility problems, oversimplification, distraction, and the dehumanization of the learning experience.

Utilizing CAPTCHA responses to differentiate humans from bots

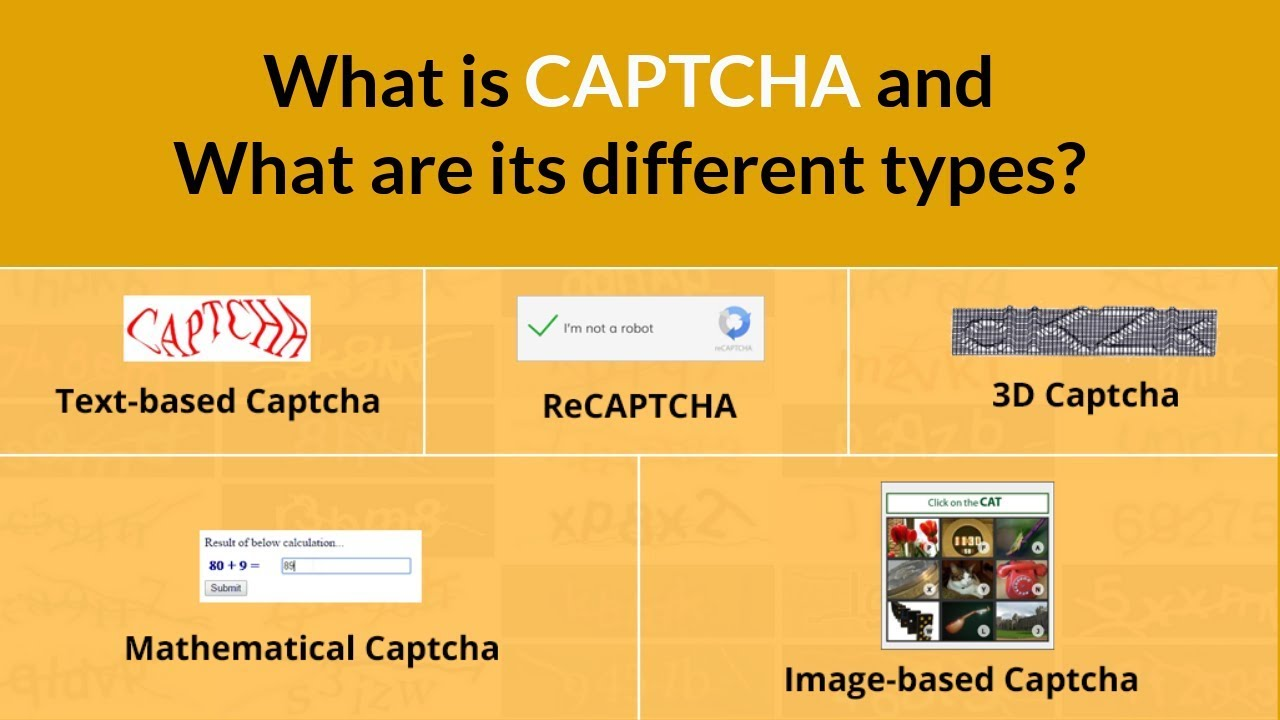

CAPTCHA (Completely Automated Public Turing test to tell Computers and Humans Apart) is a widely employed tool for distinguishing between human users and automated programs. By presenting challenges that are straightforward for humans to solve but difficult for bots to overcome, CAPTCHAs can effectively filter out bots and protect online platforms from malicious activity (Stec, 2023).
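As a rough illustration of the challenge-response idea, the Python sketch below issues a random text challenge and verifies the user’s answer on the server. The class name `CaptchaStore`, the two-minute lifetime, the excluded look-alike characters, and the in-memory storage are assumptions for illustration; rendering the distorted image (or audio) that the user actually sees is omitted. Each challenge can be checked only once, so a bot cannot keep guessing against the same token.

```python
import hashlib
import hmac
import secrets
import string
import time

# Challenge characters; look-alikes (0/O, 1/l/I) are dropped to reduce human error.
# Both the alphabet and the lifetime below are assumptions, not a standard.
ALPHABET = "".join(c for c in string.ascii_letters + string.digits if c not in "0O1lI")
TTL_SECONDS = 120


class CaptchaStore:
    """Hypothetical in-memory store of pending challenges: token -> (digest, expiry)."""

    def __init__(self) -> None:
        self._pending: dict[str, tuple[bytes, float]] = {}

    def issue(self, length: int = 5) -> tuple[str, str]:
        """Create a challenge and return (token, text); only the text is shown, distorted."""
        text = "".join(secrets.choice(ALPHABET) for _ in range(length))
        token = secrets.token_urlsafe(16)
        # Store a digest of the lowercased answer rather than the plain text.
        digest = hashlib.sha256(text.lower().encode()).digest()
        self._pending[token] = (digest, time.monotonic() + TTL_SECONDS)
        return token, text

    def verify(self, token: str, answer: str) -> bool:
        """Check an answer once; the challenge is discarded whether or not it matches."""
        entry = self._pending.pop(token, None)
        if entry is None:
            return False  # unknown or already-used token
        digest, expires_at = entry
        if time.monotonic() > expires_at:
            return False  # challenge expired
        candidate = hashlib.sha256(answer.strip().lower().encode()).digest()
        return hmac.compare_digest(digest, candidate)  # constant-time comparison
```

Answers are compared case-insensitively here, which trades a little security for fewer frustrated users.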

Common CAPTCHA types

There are various types of CAPTCHAs, each with its own strengths and limitations. Some common CAPTCHA types include:

- Text-based CAPTCHAs: These CAPTCHAs display a series of distorted letters or numbers that are difficult for bots to read but easy for humans to decipher. For instance, a CAPTCHA might present a distorted string such as “594nB” and ask the user to type it out correctly.

- Image-based CAPTCHAs: These CAPTCHAs present a grid of images and ask the user to identify specific objects in the images. For instance, a user might be asked to select all the images containing traffic lights or all the images featuring cats. Because image-based CAPTCHAs are inaccessible to many individuals with visual impairments, they are usually paired with an audio CAPTCHA as an alternative.

- Audio-based CAPTCHAs: These CAPTCHAs play a recording of spoken words or numbers and ask the user to type what they hear. This type of CAPTCHA is particularly useful for individuals with visual impairments who may struggle with text-based or image-based CAPTCHAs.

Examples of CAPTCHA challenges

Specific examples of CAPTCHA challenges that can be used to distinguish between humans and bots include:

- Distorted text CAPTCHA: The user is presented with a sequence of distorted letters or numbers and asked to correctly type them out. The distortion makes it difficult for bots to accurately identify the characters while humans can easily read them.

- Object recognition CAPTCHA: The user is shown a grid of images and asked to select all the images containing a specific object, such as cats, traffic lights, or mountains. This challenge relies on visual perception, which bots have often struggled with.

- Audio CAPTCHA: The user is played a recording of spoken words or numbers and asked to type out what they hear. This challenge tests the user’s ability to understand and transcribe spoken language, a task intended to be difficult for bots.

- Tile Sorting CAPTCHA: The user is presented with a set of scrambled tiles and asked to arrange them to form a complete image. This challenge requires spatial reasoning and pattern recognition, skills that bots have traditionally found harder than humans do.
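A tile-sorting check could be verified on the server roughly as follows. This Python sketch is hypothetical: `TilePuzzle`, `new_puzzle`, and `check_arrangement` are illustrative names, and each tile is identified by a random token so that the correct order cannot be read from the identifiers; a bot would have to interpret the tile images themselves. Displaying and dragging the tiles happens in the browser and is not shown.

```python
import secrets
from dataclasses import dataclass, field


@dataclass
class TilePuzzle:
    """Hypothetical server-side record for one tile-sorting challenge."""
    solution: list[str]                      # opaque tile tokens in the correct image order
    shuffled: list[str] = field(init=False)  # order in which tiles are first shown

    def __post_init__(self) -> None:
        rng = secrets.SystemRandom()
        self.shuffled = self.solution[:]
        while self.shuffled == self.solution:  # never show an already-solved puzzle
            rng.shuffle(self.shuffled)


def new_puzzle(grid: int = 3) -> TilePuzzle:
    """Create a grid x grid puzzle whose tiles carry random, meaningless tokens."""
    if grid < 2:
        raise ValueError("grid must be at least 2x2")
    # Random tokens instead of 0, 1, 2, ... so the order cannot be inferred from the ids.
    tokens = [secrets.token_hex(8) for _ in range(grid * grid)]
    return TilePuzzle(solution=tokens)


def check_arrangement(puzzle: TilePuzzle, submitted: list[str]) -> bool:
    """Accept only if every tile token is in its correct position."""
    return submitted == puzzle.solution
```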

By employing CAPTCHAs in various forms, online platforms can effectively distinguish between genuine human users and automated programs, safeguarding the integrity of their services and protecting against malicious activities.

Figure 2. “What is CAPTCHA and what are its different Types?”

Additional considerations for CAPTCHA implementation

While CAPTCHAs are an effective tool for distinguishing between humans and bots, it is important to consider their potential impact on user experience. CAPTCHAs that are too difficult or time-consuming can frustrate users and lead to increased abandonment rates. Additionally, CAPTCHAs should be designed to be accessible to individuals with disabilities, such as those with visual or auditory impairments.

Overall, CAPTCHAs can play a crucial role in protecting online platforms from automated attacks and ensuring that they are used by genuine human users. By carefully selecting and implementing appropriate CAPTCHA challenges, online platforms can balance security with user experience and maintain a safe and reliable environment for all users.

Combating bot misuse in higher education

Faculty can play a crucial role in mitigating the misuse of bots in higher education by implementing proactive measures and fostering a culture of academic integrity. Key strategies include:

- Educating students: Dedicate class time to discuss the impact of bots, outline course policies, and organize workshops on academic integrity.

- Implementing technology-based detection: Collaborate with IT to integrate CAPTCHA challenges and plagiarism detection software. Establish clear reporting procedures for suspected bot usage.

- Designing effective assessments: Emphasize critical thinking, incorporate open-ended questions, and utilize a variety of assessment methods. Implement authenticity checks for online submissions.

- Proactive monitoring: Regularly review online discussions, encourage student engagement, and collaborate with teaching assistants to identify potential bot activity.

- Fostering open communication: Maintain an open-door policy, promote peer support, collaborate with colleagues, and participate in institutional initiatives focused on academic integrity.

- Verification processes: Implement additional verification processes during registration such as requiring a valid student ID or a video introduction. This can make it more difficult for bots to register as students.

- Reporting mechanism: Establish a reporting mechanism where faculty and students can report suspected bot activity. This can help in early detection and prevention of bot-related fraud.

- Policy development: Develop and enforce strict policies against the use of bots in online classes. This could include penalties for students found to be using bots.
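Several of these measures (CAPTCHA challenges, verification at registration, and a reporting mechanism) can be layered in an enrollment system. The Python sketch below shows one possible screening rule; the `Registration` fields, the `screen` function, and the report threshold are hypothetical and would need to reflect a college’s own policies. Following the measures listed above, either a valid student ID or a video introduction completes verification.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    ENROLL = "enroll"
    MANUAL_REVIEW = "manual_review"
    REJECT = "reject"


@dataclass
class Registration:
    """Hypothetical fields an enrollment system might record for each registration."""
    applicant: str
    passed_captcha: bool
    student_id_verified: bool
    submitted_video_intro: bool
    reports: int = 0  # suspected-bot reports filed by faculty or students


def screen(reg: Registration, report_threshold: int = 1) -> Decision:
    """Layered checks; the rules and threshold are illustrative assumptions."""
    # Rule 1: no CAPTCHA pass, no registration.
    if not reg.passed_captcha:
        return Decision.REJECT
    # Rule 2: reported accounts go to a human before enrollment.
    if reg.reports >= report_threshold:
        return Decision.MANUAL_REVIEW
    # Rule 3: a valid student ID or a video introduction completes verification.
    if reg.student_id_verified or reg.submitted_video_intro:
        return Decision.ENROLL
    # Incomplete verification: hold for review instead of rejecting outright.
    return Decision.MANUAL_REVIEW
```

Sending incomplete or reported registrations to manual review, rather than rejecting them automatically, reduces the chance of locking out genuine students.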

By implementing these measures, faculty can address the growing problem of bots posing as students, safeguard academic integrity, and ensure a fair and equitable learning environment for all students.

Dave E. Balch, PhD, is a professor at Rio Hondo College and has published articles in the areas of ethics, humor, and distance education. Balch has received awards for excellence in teaching from the University of Redlands and the University of La Verne, and has also received the “Realizing Shared Dreams: Teamwork in the Southern California Community Colleges” award from Rio Hondo College.

Note: This article was the result of a collaboration between the human author and two AI programs: Bard and Bing.

References

Burgess, M. (2023, November 29). OpenAI’s custom chatbots are leaking their secrets. Wired. https://www.wired.com/story/openai-custom-chatbots-gpts-prompt-injection-attacks/

Google. (2023, March). Bard [Large language model]. https://bard.google.com/

Metz, C. (2023, November 6). Chatbots may ‘hallucinate’ more often than many realize. The New York Times. https://www.nytimes.com/2023/11/06/technology/chatbots-hallucination-rates.html

Microsoft. (2023, February). Bing [Large language model]. https://www.bing.com/search?form=MY02AA&OCID=MY02AA&pl=launch&q=Bing%2BAI&showconv=1

Stec, A. (2023, May 5). What is CAPTCHA and how does it work? Baeldung on Computer Science. https://www.baeldung.com/cs/captcha-intro

Tytunovich, G. (2023, October 5). Council post: How higher education became the target of bots, fake accounts and online fraud. Forbes. https://www.forbes.com/sites/forbestechcouncil/2023/01/20/how-higher-education-became-the-target-of-bots-fake-accounts-and-online-fraud/?sh=34246b781f62

West, C., Zinshteyn, M., Reagan, M., & Hall, E. (2021, September 2). That student in your community college class could be a bot. CalMatters. https://calmatters.org/education/higher-education/college-beat/2021/09/california-community-colleges-financial-aid-scam/