In the last few weeks, ChatGPT has been one of the main topics of conversation for most people working in education. The bot has raised many questions, to name just a few: how ChatGPT will affect exams, how to help students use it critically and within the boundaries of academic integrity, how to talk about it in class, and whether it can help faculty plan activities or even generate content like examples or cases.

Universities have been deciding how to deal with AI and producing the corresponding policies, mostly responding to plagiarism concerns. But from the individual teacher’s perspective, I think the best approach is to start by having a conversation with the bot: try to find out how knowledgeable it is about your subject, how accurately it answers your questions, how it performs in the activities you set, and how it reacts when challenged. I have done exactly that and this is what happened.

I teach a course for faculty on how to write multiple-choice questions. Professors work with research-based guidelines to write multiple-choice questions for their courses, including questions that target higher-order thinking skills. In my conversation with the bot, I wanted to find the answers to two main questions: “Is ChatGPT able to produce good quality multiple-choice questions, therefore making my course irrelevant?” and “Can ChatGPT be useful to support some parts or activities of the course?”

I started by asking the bot three content-related questions: what guidelines I could use to design effective multiple-choice questions, to choose good distractors (i.e. wrong answers), and to write multiple-choice questions that target higher-order thinking skills. The answers were not as detailed as the content in my course and no examples were provided (I guess further prompting would have been needed), but they were certainly clear and accurate. I asked for some elaboration on one of the points and I got a convincing clarification. So far, so good.

Next, I gave ChatGPT one of the tasks from the first week of the course, which involved improving a series of multiple-choice questions and explaining the changes made. All the improvements the bot suggested consisted of rephrasing the language in the questions, and the explanation given for each change was always the same: “Improved clarity and specificity of the question.” Although I would have liked to see more elaborate explanations, in most cases the bot suggested questions that were indeed more clearly written and easier to read. For this reason, I believe that ChatGPT can be helpful when we are struggling to phrase questions in a concise and unambiguous manner, which is especially relevant in the case of multiple-choice questions. However, an important weakness in the bot’s reply to the activity was that it paid no attention to the possible answers to the questions (e.g. the need to improve distractors, or two or more answers overlapping), a key component of multiple-choice questions.

The next step was asking the bot to generate multiple-choice questions from some input I provided. This is when things started to go downhill. ChatGPT produced a number of questions on the topic I requested, but without indicating which answer was correct or why the others were incorrect. I asked for that information and it did reply, but for some questions, apart from the answer that the bot considered correct, there was another answer that could also qualify as correct. Admittedly, ChatGPT recognized its limitations and quickly corrected itself (“Yes, you are correct. Option D can also be considered a correct answer”), but that means we would need to continuously check and verify the output it provides, which would take longer than designing the questions ourselves in the first place.

Moreover, there were two specific aspects where the bot performed very poorly. The first was that in many cases the correct answers were considerably longer than the incorrect answers, which is precisely one of the issues we should avoid when designing effective questions. The second was that the distractors were frequently of poor quality, meaning that they could easily be dismissed as wrong answers. For example, in the question:

- Which of the following is an example of implementing Multiple Means of Engagement in the UDL Framework?

A) Asking students to complete a worksheet in silence

B) Incorporating videos, audio recordings, and interactive simulations in a lesson

C) Providing students with only written text to understand a concept

A and C would be quickly identified as wrong answers by most people with some basic knowledge of the topic.

Finally, I asked ChatGPT for some literature about multiple-choice questions. It provided me with four sources. Three of them were referenced as chapters from books and a fourth one as a journal article, although publication dates and page numbers were not included, only titles and names of authors. I checked and found out that none of the sources existed, or to be precise, the books and the journal mentioned do exist, but they do not contain those chapters or article. Similarly, the authors mentioned do exist, but they have not written those sources. ChatGPT gave me a fake list of sources.

Going back to my two initial questions, these are my conclusions:

- Is ChatGPT able to produce good quality multiple-choice questions, therefore making my course irrelevant?

The answer is no. As of now, ChatGPT is not able to generate effective questions.

- Can ChatGPT be useful to support some parts or activities of the course?

The answer is yes. It can be useful to improve the writing of the questions.

I found my conversation with the bot very useful. I feel I am in a better position to teach my course next time. I know how, and to what extent, ChatGPT can help professors write multiple-choice questions, and I know what information to share with them about this. I am reassured about the content, the interactions and the activities I ask faculty to complete in my course. If this had not been the case, I would have been able to adjust and improve those aspects. And what would have happened if I had concluded that there was no point in my offering the course anymore? Well, I would have been frustrated, for sure, but I might have thought of another course, about the same topic but from a different angle, for example, a course on how to use ChatGPT to create effective multiple-choice questions.

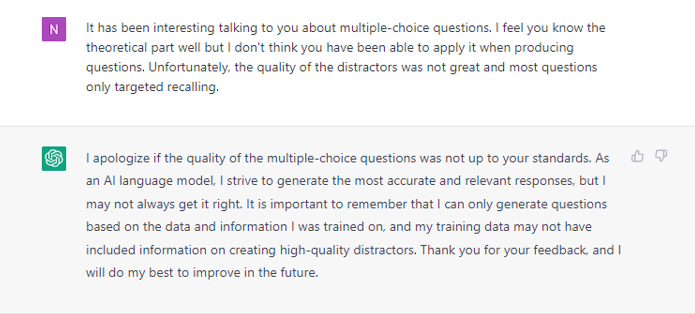

ChatGPT and I ended our conversation with the exchange below. I think its final comment clearly highlights its own limitations.

I am sure ChatGPT and I will talk again soon. I will not be asking it for sources, though.

Nuria Lopez, PhD, taught in higher education for two decades before moving to a role providing pedagogical support for faculty. She currently works as a learning consultant at the Teaching and Learning Unit of the Copenhagen Business School (Denmark).