Have your students ever told you that your tests are too hard? Tricky? Unfair? Many of us have heard these or similar comments. The conundrum is that, in some circumstances, those students may be right. Assessing student learning is a big responsibility. We report scores and assign grades to communicate how much students have learned. We use these indicators to judge whether students are prepared for more difficult work, ready to matriculate into majors, or ready to sit for certification exams. Ideally, scores and grades reflect a student's learning of a particular body of content: the content we intended them to learn. Assessments (e.g., tests, quizzes, projects, and presentations) that are haphazardly constructed, even unintentionally, can produce scores and grades that misrepresent the true extent of students' knowledge and leave students confused about what they should have been learning. Fortunately, three straightforward steps, culminating in a test blueprint, can help ensure that we are testing what we're teaching.

Step 1: Align objectives, assessments, and learning opportunities.

Learning results from students' engagement with course content, not from the content itself (Light, Cox, & Calkins, 2009). However, we often plan our courses around the content we intend to cover rather than around how students will engage with it. In our courses and lessons, we need to make sure that clear learning objectives drive the planning; that assessments are constructed to measure, and provide evidence of, the true extent to which students are meeting those objectives; and that the learning opportunities we provide let students engage with the content in ways that allow them to meet the objectives and demonstrate their learning. This is not a linear process. It is iterative, often messy, and shaped by contextual factors. Nonetheless, when alignment is a criterion for successful planning, we are more likely to be measuring what we're teaching. We do have to start somewhere, and a good place to start is with learning objectives.

Step 2: Write meaningful and assessable objectives.

If objectives drive the assessments and learning opportunities that we create for students, then the objectives must be meaningful (Biggs, 2003) as well as specific and measurable. The objectives are where we establish expectations for student learning. If, for example, we want students to think critically, our objectives must state what we mean by critical thinking. What we often lack is the specific language to do so. Taxonomies (e.g., Bloom's Taxonomy, revised by Anderson & Krathwohl, 2001; Fink's Taxonomy of Significant Learning, 2013; Wiggins and McTighe's Facets of Understanding, 2005; Biggs' Structure of the Observed Learning Outcome [SOLO] taxonomy, 2003) can be consulted to help craft the specific objectives to which we will teach.

We recommend limiting a course to roughly five to eight learning objectives. Each objective should follow this format: "Upon successful completion of this course, students will be able to evaluate theories through empirical evidence."

Step 3: Create test blueprints.

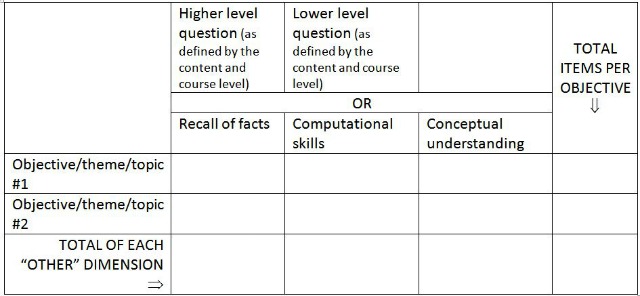

Designers of any major high-stakes exam (e.g., SAT, GRE, NCLEX) have to be able to claim that it tests what it purports to test. One way they do this is by building a test blueprint, or table of specifications. A test blueprint is a document, matrix, or other kind of chart that maps each question on an assessment to its corresponding objective, theme, or topic. If a question doesn't map to one of them, it isn't included in the assessment. A completed map shows how the items of an entire assessment are weighted and distributed across objectives, themes, or topics, as well as across other important dimensions (e.g., item difficulty or type of question). A test blueprint is, essentially, a tool for aligning assessments with objectives.
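To make the mapping rule concrete, here is a minimal Python sketch, assuming each draft item has been tagged with a single objective code. The objective codes, their wording, the items, and the function `check_blueprint` are all hypothetical. The sketch lists items that map to no objective (which, by the rule above, would be revised or dropped) and reports what share of the remaining items each objective receives, one simple way to see how a test is weighted.

```python
from collections import Counter

# Hypothetical objectives for illustration; substitute your own course objectives.
OBJECTIVES = {
    "O1": "Evaluate theories through empirical evidence",
    "O2": "Interpret descriptive statistics",
    "O3": "Design a simple experiment",
}

# Hypothetical draft test: item number -> objective code it is meant to assess.
# None marks an item that could not be mapped to any objective.
DRAFT_ITEMS = {
    1: "O1", 2: "O1", 3: "O2", 4: "O2", 5: "O2",
    6: "O3", 7: None, 8: "O1", 9: "O3", 10: "O2",
}


def check_blueprint(items, objectives):
    """Return (unmapped item numbers, percent of mapped items per objective)."""
    # Items whose tag is not a known objective do not map and should be revised or dropped.
    unmapped = sorted(n for n, obj in items.items() if obj not in objectives)
    counts = Counter(obj for obj in items.values() if obj in objectives)
    total = sum(counts.values())
    # Weight of each objective as a share of the mapped items; an objective with no items gets 0.0.
    weights = {
        obj: round(100 * counts[obj] / total, 1) if total else 0.0
        for obj in objectives
    }
    return unmapped, weights


if __name__ == "__main__":
    unmapped, weights = check_blueprint(DRAFT_ITEMS, OBJECTIVES)
    print("Items to revise or drop:", unmapped)
    for obj, pct in weights.items():
        print(f"{obj} ({OBJECTIVES[obj]}): {pct}% of mapped items")
```

With this made-up data, item 7 is flagged, and O2 carries the largest share of the test (4 of the 9 mapped items). Whether that weighting is appropriate is a judgment about the objectives, not something the code can decide.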

Course instructors also need to be able to assert that assessments provide evidence of the extent to which students are meeting the established objectives. If the blueprint shows that a test does not represent the objectives and content students were meant to learn, adjust the test before administering it. A test blueprint is easy to develop and flexible enough to adjust to just about any instructor's needs.

Consider the template below. The left-hand column lists the objectives, themes, or topics for the relevant chunk of content. Column heads can represent whatever "other" dimensions are important to you. For example, in a political science course you could map higher-level versus lower-level items; in a statistics course, you could map question categories such as recall, skills, and conceptual understanding. Once the structure of your blueprint is established, (a) plot each item in the cell where its row and column intersect, so that each cell shows the number of items in that combination of categories; (b) total the rows and columns; and (c) analyze the table to make sure the test will represent student learning well, given the objectives and the learning opportunities students had for that content.
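Steps (a) and (b) amount to a cross-tabulation, which a few lines of Python can produce. This is a sketch under stated assumptions: each item is tagged with exactly one row label and one column label, and the topics, the recall/skills/conceptual categories (borrowed from the statistics example), the item tags, and the function names `build_blueprint` and `print_blueprint` are all hypothetical. An item whose tags match no row or column raises an error instead of being silently dropped, so it can be mapped or removed.

```python
from collections import Counter

# Hypothetical column categories, taken from the statistics-course example.
CATEGORIES = ["Recall", "Skills", "Conceptual"]
# Hypothetical row labels (topics) for one chunk of content.
TOPICS = ["Descriptive statistics", "Probability", "Hypothesis testing"]

# Hypothetical draft test: one (topic, category) tag per item.
ITEMS = [
    ("Descriptive statistics", "Recall"),
    ("Descriptive statistics", "Skills"),
    ("Descriptive statistics", "Skills"),
    ("Probability", "Recall"),
    ("Probability", "Skills"),
    ("Probability", "Conceptual"),
    ("Hypothesis testing", "Recall"),
    ("Hypothesis testing", "Recall"),
    ("Hypothesis testing", "Recall"),
    ("Hypothesis testing", "Conceptual"),
]


def build_blueprint(items, topics, categories):
    """Step (a): count items per topic x category cell; step (b): total rows and columns."""
    unmatched = [it for it in items if it[0] not in topics or it[1] not in categories]
    if unmatched:
        # An item that fits no row or column does not map; fix its tags or remove it.
        raise ValueError(f"Items that do not map to the blueprint: {unmatched}")
    cells = Counter(items)
    table = {t: [cells[(t, c)] for c in categories] for t in topics}
    row_totals = {t: sum(row) for t, row in table.items()}
    col_totals = [sum(table[t][j] for t in topics) for j in range(len(categories))]
    return table, row_totals, col_totals


def print_blueprint(table, row_totals, col_totals, categories):
    """Print the matrix with totals so it can be reviewed in step (c)."""
    width = max(len(t) for t in table) + 2
    print(" " * width + "".join(c.rjust(12) for c in categories) + "Total".rjust(8))
    for t, row in table.items():
        cells = "".join(str(n).rjust(12) for n in row)
        print(t.ljust(width) + cells + str(row_totals[t]).rjust(8))
    totals = "".join(str(n).rjust(12) for n in col_totals)
    print("Total".ljust(width) + totals + str(sum(col_totals)).rjust(8))


if __name__ == "__main__":
    print_blueprint(*build_blueprint(ITEMS, TOPICS, CATEGORIES), CATEGORIES)
```

For this made-up ten-item draft, the column totals are five recall, three skills, and two conceptual items, and no hypothesis-testing item asks students to apply a skill. Step (c) remains a human judgment: whether that distribution is acceptable depends on the objectives and on how students practiced the material.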

As you develop the “map” of your assessment, consider these questions: What does each column heading mean? For example, what does “higher level” versus “lower level” really mean? Do you know? Would students know? Would student learning be improved if you shared the blueprint in advance? And, ultimately, will this planned assessment represent what you taught, what you intend to test, and how you intend to test it?

References:

Anderson, L. W., Krathwohl, D. R., et al. (Eds.). (2001). A taxonomy for learning, teaching, and assessing: A revision of Bloom's taxonomy of educational objectives. Boston, MA: Allyn & Bacon.

Biggs, J. B. (2003). Teaching for quality learning at university. London: Open University Press.

Fink, L. D. (2013). Creating significant learning experiences for college classrooms: An integrated approach to designing college courses. San Francisco: Jossey-Bass.

Light, G., Cox, R., & Calkins, S. (2009). Learning and teaching in higher education: The reflective professional (2nd ed.).

Wiggins, G., & McTighe, J. (2005). Understanding by design. Upper Saddle River, NJ: Merrill Prentice Hall.

Cindy Decker Raynak is a senior instructional designer at the Schreyer Institute for Teaching Excellence at Penn State University. Crystal Ramsay is a research project manager of faculty programs at Penn State University. Their session titled "Are You Testing What You're Teaching?" was one of the top-rated workshops at the 2016 Teaching Professor Conference.

© Magna Publications. All rights reserved.